
SSL-Terminated ALB with SNI on a Kubernetes/kOps Cluster

When I went to set this up recently, I found much of the available information to be out of date. Thankfully, things have changed for the better: the solution turned out to be much simpler than it apparently was in the not-so-distant past. I'm writing this to save someone else a few hours of their life.

My goal was to run two simple webservers with an ALB in front of them, terminating SSL using ACM certs. This release screencast shows how to do the ALB setup via the GUI; I wanted to accomplish the same using k8s.

I found that if you're using kOps, you can now enable the awsLoadBalancerController and certManager add-ons in your cluster definition without needing to install anything via Helm. You will also need to attach an additional IAM policy for Server Name Indication (SNI) to work properly.

If you’re using EKS, GKE, or any other managed control plane, it looks like there are additional setup requirements that I don’t cover here.

Cluster Definition

Assuming you've set up kOps locally, and that you're deploying the cluster into an existing VPC with an existing Internet Gateway:

```bash
#!/bin/bash

KOPS_STATE_STORE="s3://your-kops-state-store"
CLUSTER_NAME="your-cluster.example.com"
VPC_ID="your-vpc-id"
MASTER_SIZE="t3.medium"
NODE_SIZE="t3.medium"
ZONES="af-south-1a,af-south-1b" # at least two required for use with ALB

# ${HOME} is used instead of a quoted "~", which the shell would not expand.
kops create cluster --cloud aws --zones="${ZONES}" \
  --name="${CLUSTER_NAME}" \
  --vpc="${VPC_ID}" \
  --ssh-public-key="${HOME}/.ssh/id_rsa.pub" \
  --state="${KOPS_STATE_STORE}" \
  --master-size="${MASTER_SIZE}" \
  --node-size="${NODE_SIZE}" \
  # --yes # uncomment to build the AWS resources now; without it, kOps only writes the cluster spec to the state store
```

This will create two new subnets in the existing VPC, placing a worker node in each subnet and a master node in one of them. Somewhere in the AWS documentation it says that at least two Availability Zones are required for the ALB to be created successfully; I didn't take the time to check whether that is a hard-and-fast rule.
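Since an ALB is documented to need subnets in at least two Availability Zones, it can be worth failing fast before calling kops create cluster. The following is a minimal sketch; count_distinct_zones and require_two_zones are hypothetical helpers (not part of kOps) that simply count the distinct comma-separated entries in ZONES.

```bash
#!/bin/bash
# Sketch: refuse to create the cluster unless ZONES lists at least two distinct AZs.
# count_distinct_zones / require_two_zones are hypothetical helpers, not kOps commands.
set -euo pipefail

# Prints the number of distinct, non-empty entries in a comma-separated zone list.
count_distinct_zones() {
  local zones="$1"
  # Split on commas, drop blank entries, de-duplicate, then count the lines.
  printf '%s\n' "${zones}" | tr ',' '\n' | sed '/^[[:space:]]*$/d' | sort -u | wc -l | tr -d ' '
}

# Returns non-zero (with a message on stderr) if fewer than two distinct zones are given.
require_two_zones() {
  local n
  n="$(count_distinct_zones "$1")"
  if [ "${n}" -lt 2 ]; then
    echo "ALB needs subnets in at least two AZs; got ${n} in '$1'" >&2
    return 1
  fi
}
```

Usage would be something like require_two_zones "${ZONES}" && kops create cluster ... placed in the script above, so a single-zone typo stops the run before anything is written to the state store.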

After you create this cluster, you'll want to run kops edit cluster. Ensure you have two subnets; your CIDRs and zones will be different. I think they could be type: Private, since all traffic to them will be routed through the ALB, but I haven't locked this down for security:

```yaml
subnets:
- cidr: 10.0.32.0/19
  name: af-south-1a
  type: Public
  zone: af-south-1a
- cidr: 10.0.64.0/19
  name: af-south-1b
  type: Public
  zone: af-south-1b
```

Then add the following under spec:

```yaml
awsLoadBalancerController:
  enabled: true
certManager:
  enabled: true
```

You must include certManager as a prerequisite for awsLoadBalancerController. Apparently, built-in support for doing this directly in the cluster definition is relatively new; from what I've read elsewhere, the process to get this working only a year ago looks extremely arduous.

Even though this lets you use an ALB without installing components manually, you still need to ensure that whichever IAM identity you use for orchestration has permission to perform all the actions involved in ALB setup. As of this writing, you can download the policy document to attach to your IAM user with:

```bash
curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.1/docs/install/iam_policy.json
```

(Source: this EKS page; using kOps instead of EKS makes everything else on that page irrelevant here).
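Once downloaded, the file still has to be registered as a managed policy and attached to your IAM user. A minimal sketch using the AWS CLI's aws iam create-policy and aws iam attach-user-policy commands is below; the wrapper functions are hypothetical, and both the policy name and the user name are placeholders you would replace.

```bash
#!/bin/bash
# Sketch: register the downloaded iam_policy.json as a managed policy and attach it
# to an IAM user. Function names, POLICY_NAME, and the user name are assumptions.
set -euo pipefail

POLICY_NAME="${POLICY_NAME:-AWSLoadBalancerControllerIAMPolicy}"  # placeholder name

# Creates the managed policy from a local JSON file and prints the new policy's ARN.
create_lbc_policy() {
  local policy_file="$1"
  aws iam create-policy \
    --policy-name "${POLICY_NAME}" \
    --policy-document "file://${policy_file}" \
    --query 'Policy.Arn' \
    --output text
}

# Attaches an existing managed policy (by ARN) to the given IAM user.
attach_policy_to_user() {
  local user_name="$1" policy_arn="$2"
  aws iam attach-user-policy \
    --user-name "${user_name}" \
    --policy-arn "${policy_arn}"
}

# Example (your-iam-user is a placeholder):
#   arn="$(create_lbc_policy iam_policy.json)"
#   attach_policy_to_user your-iam-user "${arn}"
```

Splitting creation from attachment means the ARN printed by the first call can be reused if you later need to attach the same policy to another identity.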

Back in kops edit cluster, you'll also need to attach this additional policy for SNI to work properly:

```yaml
additionalPolicies:
  master: |
    [
      {
        "Effect": "Allow",
        "Action": [
          "elasticloadbalancing:AddListenerCertificates",
          "elasticloadbalancing:RemoveListenerCertificates"
        ],
        "Resource": ["*"]
      }
    ]
```

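Save the cluster definition and apply it: kops update cluster --yes && kops rolling-update cluster --yes. (Without --yes, kops rolling-update cluster only previews which instances would be replaced.)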
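If you'd rather not pass --name and --state on every invocation, kOps also reads the KOPS_STATE_STORE and KOPS_CLUSTER_NAME environment variables. The sketch below wraps the update in a hypothetical apply_cluster_changes function using the placeholder values from the creation script, and adds kops validate cluster --wait so the script blocks until the cluster reports healthy; the 10-minute timeout is an arbitrary choice.

```bash
#!/bin/bash
# Sketch: apply the edited cluster spec, roll instances, and wait for validation.
# apply_cluster_changes is a hypothetical helper; the values are placeholders.
set -euo pipefail

export KOPS_STATE_STORE="s3://your-kops-state-store"
export KOPS_CLUSTER_NAME="your-cluster.example.com"

apply_cluster_changes() {
  # Push the new spec (add-ons, additionalPolicies) to AWS.
  kops update cluster --yes
  # Replace instances that need it; without --yes this is only a preview.
  kops rolling-update cluster --yes
  # Block until the cluster validates, giving up after 10 minutes.
  kops validate cluster --wait 10m
}
```

Because the commands are chained under set -e, a failed update stops the script before any rolling update starts.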

Object Definitions

Here’s everything needed for a demonstration:

```yaml
apiVersion: v1
kind: List
items:
- apiVersion: apps/v1
  kind: Deployment
  metadata:
    creationTimestamp: null
    labels:
      app: web
    name: web
  spec:
    replicas: 1
    selector:
      matchLabels:
        app: web
    strategy: {}
    template:
      metadata:
        creationTimestamp: null
        labels:
          app: web
      spec:
        containers:
        - image: gcr.io/google-samples/hello-app:1.0
          name: hello-app
          resources: {}
- apiVersion: apps/v1
  kind: Deployment
  metadata:
    creationTimestamp: null
    labels:
      app: web-mq
    name: web-mq
  spec:
    replicas: 1
    selector:
      matchLabels:
        app: web-mq
    strategy: {}
    template:
      metadata:
        creationTimestamp: null
        labels:
          app: web-mq
      spec:
        containers:
        - image: gcr.io/google-samples/hello-app:1.0
          name: hello-app-mq
          resources: {}
- apiVersion: v1
  kind: Service
  metadata:
    creationTimestamp: null
    labels:
      app: web
    name: web
  spec:
    ports:
    - port: 8080
      protocol: TCP
      targetPort: 8080
    selector:
      app: web
    type: NodePort
- apiVersion: v1
  kind: Service
  metadata:
    creationTimestamp: null
    labels:
      app: web-mq
    name: web-mq
  spec:
    ports:
    - port: 8080
      protocol: TCP
      targetPort: 8080
    selector:
      app: web-mq
    type: NodePort
- apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: example-ingress
    annotations:
      kubernetes.io/ingress.class: alb
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
      alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
      #alb.ingress.kubernetes.io/certificate-arn: 'arn1,arn2,...'
  spec:
    rules:
    - host: foo.example.com
      http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: web
              port:
                number: 8080
    - host: bar.example.com
      http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: web-mq
              port:
                number: 8080
```

Notes on the above:

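- The hostnames you use must be domains whose nameservers you control.

- Commenting out alb.ingress.kubernetes.io/certificate-arn is what tells the ALB to use SNI based on the - host: entries provided here. If you uncomment that line, you can instead provide a comma-separated list of cert ARNs.

- If plain HTTP requests are not being redirected, note that the actions.ssl-redirect annotation only defines a redirect action; in v2 of the controller it takes effect when a rule's backend references it by name (with port name use-annotation), and since v2.2 the alb.ingress.kubernetes.io/ssl-redirect: '443' annotation is a simpler way to redirect all HTTP traffic.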

Apply the above using kubectl apply -f <filename>. You should see everything created successfully, but if you see this error:

```
Error from server (InternalError): error when creating "demo.yaml": Internal error occurred: failed calling webhook "vingress.elbv2.k8s.aws": failed to call webhook: Post "https://aws-load-balancer-webhook-service.kube-system.svc:443/validate-networking-v1-ingress?timeout=10s": dial tcp 100.65.197.84:443: connect: connection refused
```

You can get around it by deleting the validating webhook configuration (it is cluster-scoped, so no namespace flag is needed):

```
$ kubectl delete ValidatingWebhookConfiguration aws-load-balancer-webhook
validatingwebhookconfiguration.admissionregistration.k8s.io "aws-load-balancer-webhook" deleted
```

(This seems non-ideal, and it probably is, but an understanding of this issue eluded me; I’m guessing there’s a version mismatch somewhere.)
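Before deleting the webhook, it may be worth checking whether the controller simply hadn't finished starting: a "connection refused" from an admission webhook often means the Service behind it has no ready endpoints yet (for example, while the controller pods or their cert-manager-issued certificate are still being set up). The sketch below polls the Service named in the error message using kubectl's jsonpath output; the helper names, attempt count, and 10-second interval are assumptions.

```bash
#!/bin/bash
# Sketch: wait for the controller's webhook Service to have ready endpoints
# before applying manifests. Helper names and timings are assumptions.
set -euo pipefail

WEBHOOK_NS="kube-system"
WEBHOOK_SVC="aws-load-balancer-webhook-service"  # name taken from the error message

# Prints the ready endpoint IPs of the webhook Service (empty if there are none).
webhook_endpoint_ips() {
  kubectl -n "${WEBHOOK_NS}" get endpoints "${WEBHOOK_SVC}" \
    -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Polls up to $1 times (default 30); returns 0 as soon as endpoints exist.
wait_for_webhook() {
  local attempts="${1:-30}" i
  for ((i = 1; i <= attempts; i++)); do
    if [ -n "$(webhook_endpoint_ips 2>/dev/null || true)" ]; then
      return 0
    fi
    sleep "${WEBHOOK_POLL_SECONDS:-10}"
  done
  echo "no ready endpoints for ${WEBHOOK_NS}/${WEBHOOK_SVC}" >&2
  return 1
}
```

Used as wait_for_webhook && kubectl apply -f demo.yaml, this keeps the validating webhook in place and only applies the manifest once something is listening behind it.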

There is no external DNS component in use here, which means you'll have to grab the ALB's public hostname from kubectl get ingress and add CNAME records aliasing your subdomains to it (an apex domain can't be a CNAME; Route 53 alias records cover that case). There is also no auto-creation of ACM certs, which must be in the same region as the VPC. I'm sure auto-creation is possible somehow, but without it, you'll have to create the certs in ACM beforehand.
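The manual DNS step can be partly scripted. The ALB's DNS name is published in the Ingress status, so kubectl's jsonpath output can read it; the sketch below (assuming the Ingress is named example-ingress, as in the manifest above) prints one zone-file-style CNAME line per host, and a separate aws acm list-certificates call lists the certificate domains in a region as a quick check that they were pre-created. The helper names and the 300-second TTL are assumptions.

```bash
#!/bin/bash
# Sketch: read the ALB hostname from the Ingress status and print CNAME records;
# also list ACM certificate domains in a region. Names and TTL are assumptions.
set -euo pipefail

# Prints the ALB DNS name assigned to the Ingress (empty until it is provisioned).
alb_hostname() {
  kubectl get ingress "${1:-example-ingress}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Prints zone-file style lines: "<host>. 300 IN CNAME <alb>."
cname_records() {
  local alb="$1"; shift
  local host
  for host in "$@"; do
    printf '%s. 300 IN CNAME %s.\n' "${host}" "${alb}"
  done
}

# Lists the domain names of ACM certificates in the given region.
acm_domains() {
  aws acm list-certificates --region "$1" \
    --query 'CertificateSummaryList[].DomainName' --output text
}
```

For the demo this would be cname_records "$(alb_hostname)" foo.example.com bar.example.com, with acm_domains af-south-1 confirming both hostnames are covered by a certificate.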

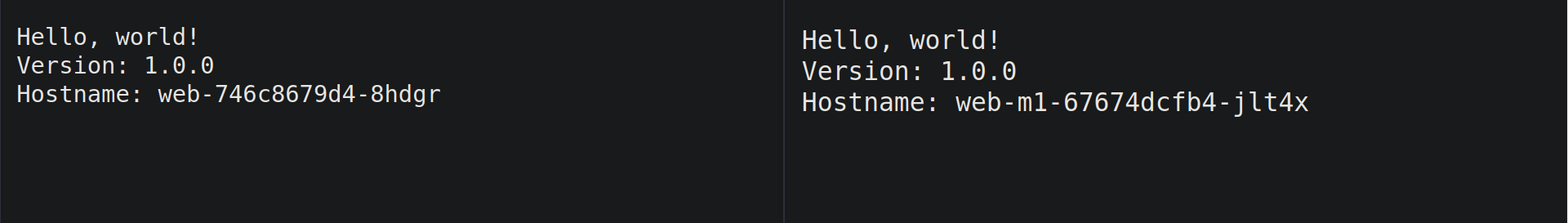

Once the CNAME records have propagated and the ALB is online, you should be able to pull up both domains in your browser side-by-side, confirm that they have been redirected to HTTPS successfully, and see something like this:
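To check the redirect from a terminal rather than a browser, curl's --write-out variables %{http_code} and %{redirect_url} report the status and the Location target without following it. The sketch below uses a hypothetical check_https_redirect helper and the example hostnames from the manifest; a 301 pointing at an https:// URL is what you should see if the HTTP listener is redirecting.

```bash
#!/bin/bash
# Sketch: confirm that plain HTTP requests are redirected to HTTPS.
# check_https_redirect is a hypothetical helper; hosts are the demo placeholders.
set -euo pipefail

# Succeeds if http://<host>/ answers 301 with a Location starting with https://
check_https_redirect() {
  local host="$1" result code location
  # -s: quiet, -o /dev/null: discard the body, -w: print status and redirect target.
  # "|| true" keeps a connection failure (reported as 000) from aborting the script.
  result="$(curl -s -o /dev/null -w '%{http_code} %{redirect_url}' "http://${host}/" || true)"
  code="${result%% *}"
  location="${result#* }"
  if [ "${code}" = "301" ] && [[ "${location}" == https://* ]]; then
    echo "${host}: OK -> ${location}"
  else
    echo "${host}: expected 301 to https, got '${result}'" >&2
    return 1
  fi
}
```

For example: for h in foo.example.com bar.example.com; do check_https_redirect "$h"; done.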